(NOTE: This article is a slightly abbreviated and edited version of a blog originally published in May 2023.)

In 2006, British mathematician and entrepreneur Clive Humby proclaimed that “data is the new oil.”

At the time, his enthusiastic (if not exaggerated) comment reflected the fervor and faith in the then-expanding internet economy. And his metaphor had some weight, too. Like oil, data can be collected (or maybe one should say extracted), refined, and sold. Both are also in high demand, and just as the inappropriate or excessive use of oil has deleterious effects on the planet, so may the reckless use of data.

Recently, a new kind of oil has been worrying many and shaking up the knowledge workplace: ChatGPT. Released by OpenAI in November 2022, ChatGPT combines chatbot functionality with a very clever language model; the GPT in its name stands for Generative Pre-trained Transformer.

Global Campus previously published a blog about robots in the workplace. One of the concerns raised then was that of AI taking away our jobs. But perhaps, now, the even bigger concern is AI doing our writing, generating our essays, or even our TV show scripts. That is, many are worried about AI substituting for both our creative and critical thinking.

Training Our AI Writing Helper

ChatGPT is not an entirely new technology. That is, experts have long integrated large language models into customer service chatbots, Google searches, and autocomplete email features. The ChatGPT of today is an updated version of GPT-3, which has been around since 2020. But ChatGPT’s origins go further back. Almost 60 years ago, MIT’s Joseph Weizenbaum rolled out ELIZA: the first chatbot. Named after Eliza Doolittle, this chatbot mimicked a Rogerian therapist by (perhaps annoyingly) turning users’ statements back into questions. If someone said, for instance, “My father hates me,” it would reply with another question: “Why do you say your father hates you?” And so on.
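To see how little "understanding" that trick requires, here is a minimal sketch of ELIZA-style reflection. It is a simplification and an assumption, not Weizenbaum's code: the real ELIZA matched keywords against a script of decomposition and reassembly rules, whereas this sketch uses one hypothetical pronoun-swap table and a single question template.

```python
# Hypothetical, simplified pronoun-swap table (not ELIZA's actual script).
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}


def reflect(statement: str) -> str:
    """Swap first- and second-person words in a user's statement."""
    words = statement.lower().strip(" .!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Turn the statement back into a question with one fixed template."""
    return f"Why do you say {reflect(statement)}?"


print(respond("My father hates me"))
# prints: Why do you say your father hates you?
```

The bot never knows anything about fathers; it only rearranges the words it was given.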

The current ChatGPT’s immense knowledge and conversational ability are indeed impressive. To acquire these skills, ChatGPT was “trained on huge amounts of data from the Internet, including conversations.” An encyclopedia of text-based data was combined with a “machine learning technique called Reinforcement Learning from Human Feedback (RLHF).” This is a technique in which “human trainers provided the model with conversations in which they played both the AI chatbot and the user.” In other words, this bot read a lot of text and practiced mimicking human conversations. Its responses, nonetheless, are not based on knowing the answers, but on predicting what words will come next in a series.
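The idea of "predicting what words will come next" can be illustrated with a toy model that simply counts which word tends to follow which. This is a deliberately crude stand-in: GPT models use a transformer neural network over tokens and learn far richer patterns, so the bigram counter below (with a made-up one-line corpus) only shows the general principle of prediction without knowledge.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict:
    """For each word, count which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, made-up training corpus for illustration only.
corpus = "data is the new oil and data is valuable and oil is valuable"
model = train_bigrams(corpus)

print(predict_next(model, "data"))  # prints: is
print(predict_next(model, "is"))    # prints: valuable
print(predict_next(model, "gold"))  # prints: None
```

The model will confidently continue any sentence it has seen words from, whether or not the result is true, which foreshadows the errors discussed later.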

The result of this training is a chatbot that is almost indistinguishable from a human voice. And it’s getting better, too. As the chatbot engages with more users, its tone and conversations become increasingly lifelike (OpenAI).

Using ChatGPT for Mundane Writing Tasks

Many have used, tested, and challenged ChatGPT. Although one can’t say for certain that the bot always admits its mistakes, it definitely rejects inappropriate requests. It will deliver some clever pick-up lines. However, it won’t provide instructions for cheating on your taxes or on your driver’s license exam. And if you ask it what happens after you die, it is suitably evasive.

But what makes ChatGPT so popular, and some would say dangerous, is the plethora of text-based documents it can produce, such as the following:

- Long definitions

- Emails and letters

- Scripts for podcasts and videos

- Speeches

- Basic instructions

- Quiz questions

- Discussion prompts

- Lesson plans

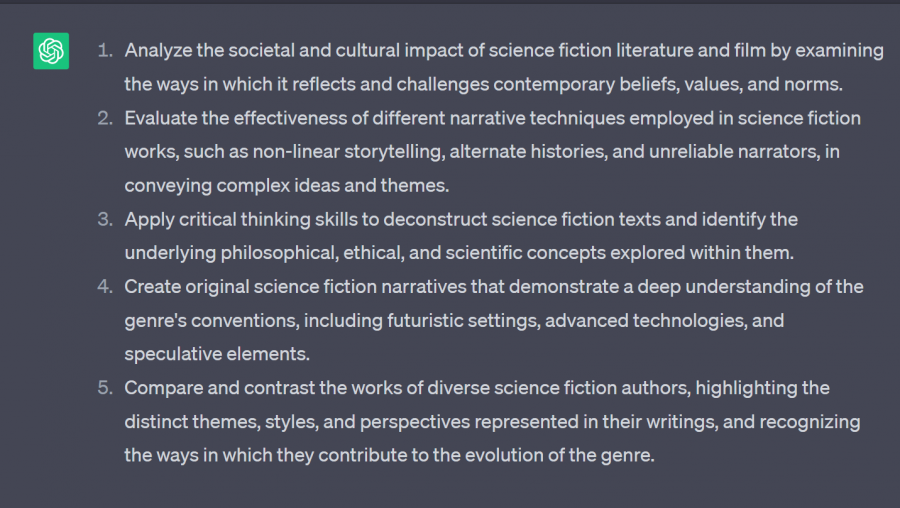

- Learning objectives

- Designs for rubrics

- Outlines for reports and proposals

- Summaries of arguments

- Press releases

- Essays

And this is only a short list of its talents. That is, there are people who have used this friendly bot to construct emails to students, quiz questions, and definitions. The internet is also awash with how-to articles on using ChatGPT to write marketing copy, novels, and speeches. Noy and Zhang even claim that this “generative writing tool increases the output quality of low-ability workers while reducing their time spent, and it allows high-ability workers to maintain their quality standards while becoming significantly faster.”

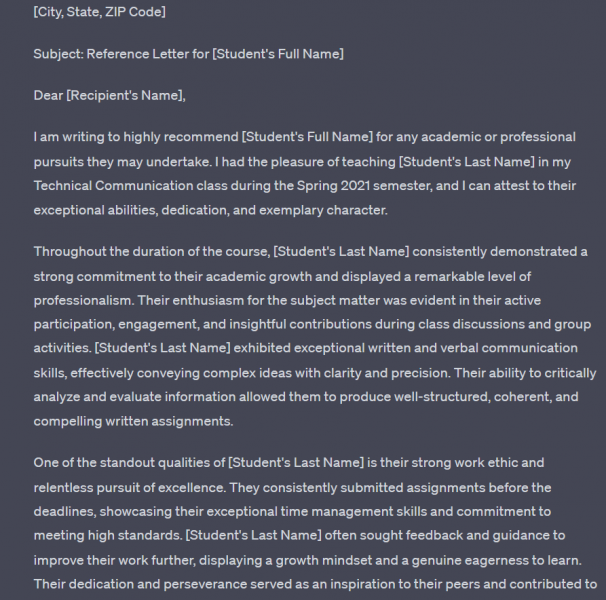

Below are examples of two onerous writing tasks assigned to ChatGPT: a reference letter and learning goals.

Recognizing ChatGPT’s Limited Knowledge

Despite helping writers with mundane tasks, this artificial intelligence helper does have its limitations. First of all, it is only as wise as its instructions. For instance, the effusive reference letter above resulted from it having no guidance about length or tone. ChatGPT just threw everything into the soup.

This AI helper also makes mistakes. In fact, right on the first page, OpenAI honestly admits that its chatbot “may occasionally generate incorrect information, and produce harmful instructions or biased content.” It also has “limited knowledge of the world and events after 2021.”

And it reveals these gaps, often humorously.

For instance, when prodded to provide information on several well-known professors from various departments, it came back with wrong answers. In fact, it actually misidentified one well-known department chair as a Floridian famous for his philanthropy and footwear empire. In this case, ChatGPT not only demonstrated “limited knowledge of the world” but also generated incorrect information. As academics, writers, and global citizens, we should be concerned about releasing more fake information into the world.

Taking into consideration these and other errors, one wonders on what data, exactly, ChatGPT was trained. Did it, for instance, just skip over universities? Academics? Respected academics with important accomplishments? As we know, what the internet prioritizes says a lot about what it and its users value.

Creating Errors

There are other limitations. OpenAI’s ChatGPT can’t write a self-reflection or decent poetry. And because it is not connected to the internet, it cannot summarize recent online content.

It also can’t approximate the tone of this article, which shifts among formal, informal, and colloquial. Nor can it whimsically insert allusions or pop culture references.

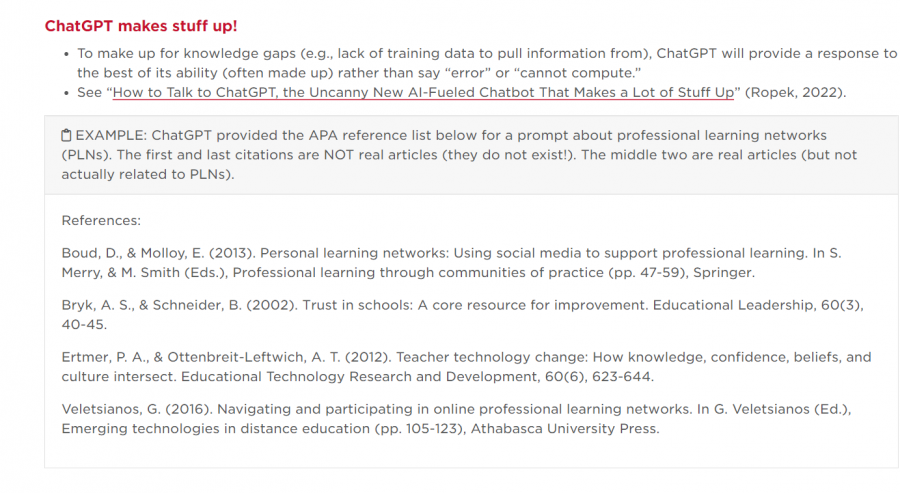

To compensate for its knowledge gaps, ChatGPT generates answers that are incorrect or only partially correct.

In generating mistakes, ChatGPT does mimic the human tendency to fumble, to tap-dance around an answer, and to make up material rather than humbly admit ignorance.

Passing Along Misinformation

Because it was trained on text-based data that might have been incorrect in the first place, ChatGPT often passes this fakery along. It also (as the example above shows) has a tendency to fabricate references and quotations.

It can also spread misinformation. (Misinformation, unintentional false or inaccurate information, is different from disinformation: the intentional spread of untruths to deceive.)

CNET and Bankrate discovered this glitch the hard way. For months, they had been duplicitously publishing AI-generated informational articles as human-written articles under a byline. When this unethical behavior was discovered, it drew the ire of the internet.

CNET’s stories even contained both plagiarism and factual mistakes, or what Jon Christian at Futurism called “bone-headed errors.” Christian humorously drew attention to mathematical mistakes that were delivered with all the panache of a financial advisor. For instance, the article claimed that “if you deposit $10,000 into a savings account that earns 3% interest compounding annually, you’ll earn $10,300 at the end of the first year.” In reality, you’d be earning only $300.
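The arithmetic behind that error is easy to check. A minimal sketch, assuming interest compounds once a year as the quoted sentence says: the balance after t years is P(1 + r)^t, so $10,300 is what the account holds after one year, and only $300 of that is earnings.

```python
def balance_after_years(principal: float, annual_rate: float, years: int) -> float:
    """Account balance with annual compounding: principal * (1 + rate) ** years."""
    return principal * (1 + annual_rate) ** years


principal = 10_000
balance = balance_after_years(principal, 0.03, 1)
interest = balance - principal  # earnings are the balance minus the deposit

print(f"Balance: ${balance:,.2f}")    # prints: Balance: $10,300.00
print(f"Interest: ${interest:,.2f}")  # prints: Interest: $300.00
```

The AI-written article reported the first number as if it were the second.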

All three screwups. . . . highlight a core issue with current-generation AI text generators: while they’re legitimately impressive at spitting out glib, true-sounding prose, they have a notoriously difficult time distinguishing fact from fiction.

Revealing Biases

And ChatGPT is not unbiased either. First, this bot has a strong US leaning. For instance, when prompted to write about the small town of Wingham, ON, it generated some sunny, nondescript prose. However, it omitted the town’s biggest claim to fame: it is the birthplace of Nobel Prize-winning author Alice Munro.

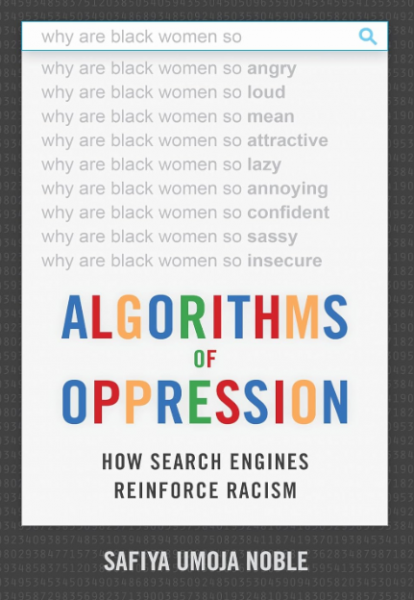

This bias stems from ChatGPT being trained on data pulled from the internet. Thus, it reflects all the prejudices of those who wrote and compiled that information.

This problem was best articulated by Safiya Umoja Noble in her landmark book Algorithms of Oppression.

In this text, she challenges the idea that search engines are value-neutral, exposing their hegemonic norms and the consequences of their sexist and racist biases. ChatGPT, to be sure, is also affected by, if not infected with, these biases.

What really made me lose confidence in ChatGPT was when I asked whether the United States had ever had a president with African ancestry. It answered no, then apologized after I reminded the chatbot about Barack Obama.

Despite agreeing with Noble’s and Abdul-Alim’s very serious concerns, and thinking that ChatGPT can be remarkably dumb at times, many may not want to smash the algorithmic machines anytime soon. Furthermore, there are writers who do use this bot to generate correct definitions of unfamiliar technical terms encountered in their work. For instance, it can help non-experts understand the basics of such concepts as computational fluid dynamics and geospatial engineering. Still, many professionals choose not to rely on or trust it too much.

Letting Robots Do Your Homework

But it is students’ trust in and reliance on ChatGPT that is causing chaos and consternation in the education world.

That is, many 2022 cases of cheating were connected to one of this bot’s most popular features: its impressive ability to generate essays in seconds. For instance, it constructed a seven-paragraph compare-and-contrast essay on Impressionism and Post-Impressionism in under a minute.

And the content of this essay, though vague, does hold some truth: “Impressionism had a profound impact on the art world, challenging traditional academic conventions. Its emphasis on capturing the fleeting qualities of light and atmosphere paved the way for modern art movements. Post-impressionism, building upon the foundations of impressionism, further pushed the boundaries of artistic expression. Artists like Georges Seurat developed the technique of pointillism, while Paul Gauguin explored new avenues in color symbolism. The post-impressionists’ bold experimentation influenced later art movements, such as fauvism and expressionism.”

With a few modifications and some fact-checking, this text would fit comfortably into an introductory art textbook, or perhaps a high school or college essay.

Sounding the Alarm About ChatGPT

Very shortly after people discovered this essay-writing feature, stories of academic integrity violations flooded the internet. An instructor at an R1 STEM grad program confessed that several students had cheated on a project report milestone. “All 15 students are citing papers that don’t exist.” An alarming article from The Chronicle of Higher Education, written by a student, warned that educators had no idea how much students were using AI. The author rejected the claim that AI’s voice is easy to detect. “It’s very easy to use AI to do the lion’s share of the thinking while still submitting work that looks like your own.”

And it’s not just a minority of students using ChatGPT either. In a study.com survey of 200 K-12 teachers, 26% had already caught a student cheating by using this tool. In a BestColleges survey of 1,000 current undergraduate and graduate students (March 2023), 50% of students admitted to using AI for some portion of their assignment, 30% for the majority, and 17% had “used it to complete an assignment and turn it in with no edits.”

Soon after publications like Forbes and Business Insider began pushing out articles about rampant cheating, the internet was buzzing. An elite program at a Florida high school reported a chatbot “cheating scandal.” But probably the most notorious episode involved a student who used this bot to write an essay for his Ethics and Artificial Intelligence course. Sadly, the student did not really understand the point of the assignment.

Incorporating ChatGPT in the Classroom

According to a Gizmodo article, many school districts, such as those in New York City, Los Angeles, Seattle, and Fairfax County, Virginia, have banned ChatGPT.

But a growing number of teachers aren’t that concerned. Many don’t want to ban ChatGPT altogether; eliminating this tool from educational settings, they caution, would do far more harm than good. Instead, they argue that teachers must set clearer writing expectations about cheating. They should also create ingenious assignments that students can’t hack with their ChatGPT writing coach, as well as construct learning activities that reveal this tool’s limitations.

Others have suggested that the real problem is teachers relying on methods of assessment that are too ChatGPT-hackable: weighty term papers and final exams on generic topics. Teachers may need to rethink their testing strategies, or as that student from the Chronicle asserted, “[M]assive structural change is needed if our schools are going to keep training students to think critically.”

Sam Altman, CEO of OpenAI, also doesn’t agree with all the hand-wringing about ChatGPT cheating. He blithely suggested that schools need to “get over it.”

Generative text is something we all need to adapt to . . . . We adapted to calculators and changed what we tested for in math class, I imagine. This is a more extreme version of that, no doubt, but also the benefits of it are more extreme, as well.

Read MTU’s own Rod Bishop’s guidance on ChatGPT in the university classroom. And think about your stance on this little AI writing helper.