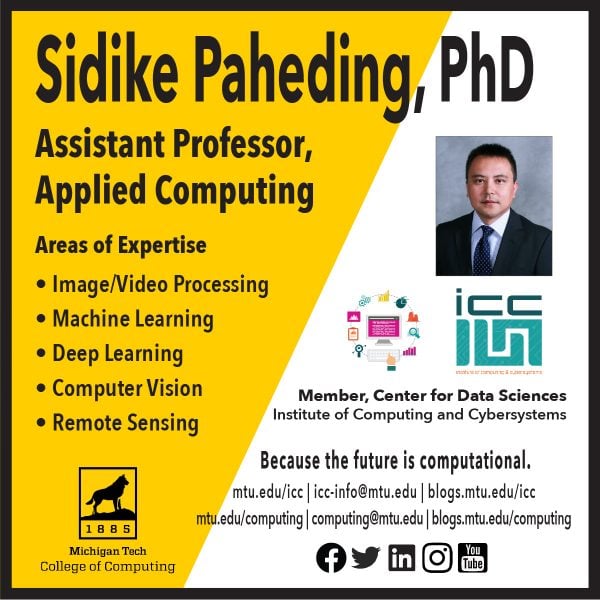

A scholarly paper co-authored by Assistant Professor Sidike Paheding, Applied Computing, is one of two papers to receive the 2020 Best Paper Award from the open-access journal Electronics, published by MDPI.

The paper is a concise survey of recent advances in deep learning.

Paheding is a member of the Institute of Computing and Cybersystems’ (ICC) Center for Data Sciences (DataS).

Co-authors of the article, “A State-of-the-Art Survey on Deep Learning Theory and Architectures,” are Md Zahangir Alom, Tarek M. Taha, Chris Yakopcic, Stefan Westberg, Mst Shamima Nasrin, Mahmudul Hasan, Brian C. Van Essen, Abdul A. S. Awwal, and Vijayan K. Asari. The paper was published March 5, 2019, in volume 8, issue 3, of the journal as article number 292.

Papers were evaluated on originality and significance, citations, and downloads. The authors will receive a monetary award, a certificate, and the opportunity to publish one paper free of charge before December 31, 2021, subject to the journal's normal peer review procedure.

Electronics is an international peer-reviewed open access journal on the science of electronics and its applications. It is published online semimonthly by MDPI.

MDPI, a scholarly open access publisher founded in 1996, publishes 310 peer-reviewed, open access journals across diverse fields.

Paper Abstract

In recent years, deep learning has achieved tremendous success in a variety of application domains. This new field of machine learning has been growing rapidly and has been applied to most traditional application domains, as well as to new areas that present further opportunities. Different methods have been proposed for different categories of learning, including supervised, semi-supervised, and unsupervised learning. Experimental results show that deep learning achieves state-of-the-art performance compared with traditional machine learning approaches in image processing, computer vision, speech recognition, machine translation, art, medical imaging, medical information processing, robotics and control, bioinformatics, natural language processing, cybersecurity, and many other fields.

This paper presents a brief survey of the advances in Deep Learning (DL), starting with the Deep Neural Network (DNN). The survey goes on to cover Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs), Auto-Encoders (AEs), Deep Belief Networks (DBNs), Generative Adversarial Networks (GANs), and Deep Reinforcement Learning (DRL). We also discuss recent developments, such as advanced variants of these DL approaches. This work considers most of the papers published since 2012, when the modern history of deep learning began.

Furthermore, this survey covers DL approaches that have been explored and evaluated in different application domains. We also include recently developed frameworks, SDKs, and benchmark datasets used for implementing and evaluating deep learning approaches. Several surveys on DL using neural networks have been published, along with a survey on Reinforcement Learning (RL). However, those papers do not discuss individual advanced techniques for training large-scale deep learning models or recently developed generative models.