Your Business Systems Aren’t Just Software Anymore.

If you’ve ever thought, “Why does it take three dashboards, two spreadsheets, and a weekly meeting to answer a simple business question?” you’re already bumping into the problems that Business Information Systems are meant to solve. And you’ve probably had a close encounter with how AI is changing the game.

A Business Information System (BIS) is the combination of tools, software, and processes that organizations use to gather, store, and analyze business data. A BIS helps teams make sense of information such as sales trends, customer behavior, inventory levels, financial performance, and more.

Most organizations rely heavily on business information systems. Below are a few examples and their capabilities.

- ERP (Enterprise Resource Planning): Integrates core operations (finance, purchasing, inventory, manufacturing, and HR) into one system (e.g., SAP, Oracle).

- CRM (Customer Relationship Management): Manages customer data and interactions, such as sales pipelines, marketing campaigns, and service cases (e.g., Salesforce).

- HRIS (Human Resource Information System): Handles HR data and processes, such as payroll, benefits, recruiting, and performance (e.g., Workday).

- SCM (Supply Chain Management): Plans and manages supply chain activities, such as procurement, logistics, warehousing, and distribution.

- BI (Business Intelligence): Tools for dashboards, reporting, and analysis to support decisions (e.g., Power BI, Tableau).

- DSS (Decision Support System): Combines data and models to help evaluate options (pricing, capacity, risk scenarios).

- WMS (Warehouse Management System): Tracks warehouse inventory, picking/packing, replenishment, and shipping.

- POS (Point of Sale): Captures sales transactions in retail and often links to inventory and customer data.

The problem was this: for a long time, most of these systems behaved like filing cabinets. They stored information, but they didn’t think with you, or even like you.

But that has been changing fast.

How AI for Business Information Systems Works (In Real Life)

New and updated Business Information Systems are increasingly adept at answering plain-English questions: Why are returns up? What’s driving churn? What happens if we change lead times? They can then respond with useful answers and recommended next steps.

This shift shows how AI has transformed BIS. Instead of reporting only what happened, AI-enhanced BIS can learn from data, spot patterns, flag risks, forecast demand, and support better and quicker decisions through recommendations and automation. In other words, it can help teams move from “What happened?” to “What should we do next?”

In day-to-day business operations, then, AI often shows up in several practical but high-impact ways:

- Forecasting demand more accurately (so you’re not overstocked or out of stock)

- Improving supply chain and logistics (inventory levels, shipping routes, lead times)

- Assessing risk and detecting anomalies (fraud, compliance flags, unusual transactions)

- Automating reporting and surfacing insights (less manual work, more usable information)

- Helping teams interact with enterprise systems using natural language (ask a question, get an answer, think about next steps)
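To make the anomaly-detection idea a little more concrete, here is a minimal sketch of one simple way a system might flag unusual transactions. It assumes a robust "modified z-score" rule based on the median and the median absolute deviation (MAD); the function name `flag_unusual_transactions`, the 3.5 threshold, and the sample amounts are hypothetical, and production fraud or compliance tools typically rely on much richer models.

```python
from statistics import median


def flag_unusual_transactions(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds `threshold`.

    The modified z-score is 0.6745 * (x - median) / MAD. Using the median and
    MAD (instead of mean and standard deviation) keeps a single huge outlier
    from hiding itself by inflating the spread.
    """
    if not amounts:
        return []
    med = median(amounts)
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        # Most values are identical: flag anything that differs from the median.
        return [i for i, x in enumerate(amounts) if x != med]
    return [
        i for i, x in enumerate(amounts)
        if abs(0.6745 * (x - med) / mad) > threshold
    ]


# Hypothetical daily transaction amounts for one account.
amounts = [120.0, 98.5, 110.0, 105.25, 4_900.0, 101.0, 115.0]
print(flag_unusual_transactions(amounts))  # → [4]
```

The median-based rule is chosen here only because it is easy to read and resists being skewed by the very outliers it is looking for.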

Some Recent Examples of AI Being Embedded into Platforms

Major software vendors have already embedded AI into the platforms that many organizations rely on heavily.

- March 2023: Microsoft announced Dynamics 365 Copilot (for CRM and ERP workflows), then made Copilot for Sales and Copilot for Service generally available in February 2024.

- June 2023: ServiceNow introduced Now Assist for Virtual Agent (generative AI for more intelligent self-service)

- September 2023: SAP launched Joule, a generative AI assistant embedded across SAP solutions

- March 2024: Oracle incorporated new generative AI capabilities within Oracle Fusion Cloud Applications

- April 2024: Salesforce announced general availability of Einstein Copilot (a conversational AI assistant for CRM)

Case Study: Embedding AI into CRM to Support Workflow and Reduce Response Times

Industrial Scientific’s support team was overwhelmed, and morale was declining. Why? The team was handling upwards of 1,200 requests per month (device issues, troubleshooting, documentation). To fulfill these requests, employees often had to consult multiple departments. As a result, response times stretched to days, damaging the customer experience.

To solve this problem, the organization built an AI support pipeline (“SensAI”) that connects Salesforce (CRM) with Oracle Cloud Infrastructure generative AI and a documentation knowledge base. When an inquiry lands in Salesforce, it triggers an automated workflow that uses RAG (retrieval-augmented generation) over product docs. The workflow then returns, through Salesforce, a response tailored to the customer’s support contract.
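For readers curious about what "RAG over product docs" means in practice, here is a minimal, self-contained sketch of the retrieve-then-generate pattern. It is not Industrial Scientific’s implementation: the documentation snippets, contract tiers, and function names are hypothetical, simple word-overlap scoring stands in for the vector search a real system would typically use, and the assembled prompt would be sent to a generative model rather than printed.

```python
import re

# Hypothetical documentation snippets standing in for a product knowledge base.
DOCS = {
    "calibration": "Run bump tests daily and calibrate the device every 30 days.",
    "battery": "Charge the device for 6 hours before first use and replace batteries every 2 years.",
    "alarms": "A flashing red LED with a loud tone indicates a high alarm.",
}

# Hypothetical support-contract tiers mapped to response instructions.
CONTRACT_NOTES = {
    "standard": "Point the customer to self-service steps.",
    "premium": "Offer a callback from a support engineer.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, k=2):
    """Return the k snippets sharing the most words with the question.

    Word overlap is a stand-in for embedding-based similarity search.
    """
    q_words = tokenize(question)
    ranked = sorted(
        DOCS.values(),
        key=lambda doc: len(q_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, contract_tier):
    """Assemble a grounded prompt: retrieved docs, contract guidance, and the question."""
    context = "\n".join(f"- {snippet}" for snippet in retrieve(question))
    note = CONTRACT_NOTES.get(contract_tier, CONTRACT_NOTES["standard"])
    return (
        "Answer using only the documentation below.\n"
        f"Documentation:\n{context}\n"
        f"Contract guidance: {note}\n"
        f"Question: {question}"
    )


print(build_prompt("How often should I calibrate my device?", "premium"))
```

The key design point is that the model is asked to answer from retrieved documentation rather than from memory, and the contract tier shapes the instructions, which mirrors the "tailored to the customer’s support contract" step described above.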

As a result, response times dropped from days to minutes, with near-immediate support for routine inquiries. The system responded to 2,230+ emails and saved 185+ hours of staff time, not only cutting costs but also substantially reducing employee stress.

The bottom line: AI is no longer just a tool on the side; it is quickly becoming the interface and accelerator for business systems.

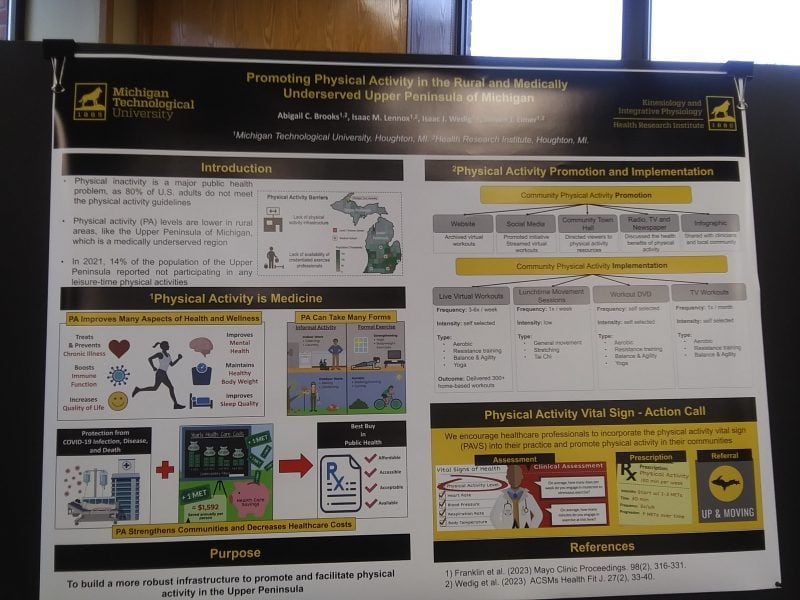

Earn Michigan Tech’s Online Certificate in AI for Business Information Systems

If you want to evaluate AI tools, implement them responsibly, and translate AI into measurable outcomes, you need a foundation in both AI concepts and business information systems. Michigan Tech’s online graduate certificate in AI for Business Information Systems is built to provide exactly that, demonstrating how AI integrates with core business systems to streamline decision-making, improve accuracy, and drive cost savings.

In just 9 credits, you’ll complete two required courses, BA 5200 (Information System Management and Data Analytics) and MIS 5000 (Emerging Technologies), plus one elective: either MIS 4400 (Business Intelligence and Analytics) or MIS 5100 (Information Systems Projects).

This certificate is a strong fit for leaders, business professionals, managers, and IT analysts who want to guide AI adoption, strengthen BI/analytics capabilities, or move into digital transformation and AI-enabled roles.