Bo Chen, assistant professor of computer science and member of the Institute of Computing and Cybersystems' Center for Cybersecurity, is the principal investigator on a project that has received a $249,918 research and development grant from the National Science Foundation. The project is titled “SaTC: CORE: Small: Collaborative: Hardware-Assisted Plausibly Deniable System for Mobile Devices.” It is a potential three-year project.

Abstract: Mobile computing devices typically use encryption to protect sensitive information. However, the traditional encryption systems used in mobile devices cannot defend against an active attacker who can force the device owner to disclose the key used to decrypt the sensitive information. This is a particular concern for dissident users who are targets of nation states. For example, a human rights worker might collect evidence of untoward activities in a region of oppression or conflict, store it in encrypted form on a mobile device, and then be coerced by an official into disclosing the decryption key. Plausibly Deniable Encryption (PDE) has been proposed to defend against adversaries who can coerce users into revealing encrypted sensitive content. However, existing techniques suffer from several problems when used in flash-memory-based mobile devices: weak deniability caused by the way read/write/erase operations are handled at the operating system level and at the flash translation layer, various types of side-channel attacks, and the computation and power limitations of mobile devices. This project investigates a unique opportunity to develop an efficient (low-overhead) and effective (high-deniability) hardware-assisted PDE scheme on mainstream mobile devices that is robust against a multi-snapshot adversary. The project includes significant curriculum development activities and outreach activities for K-12 students.

This project fundamentally advances mobile PDE systems by leveraging existing hardware features, such as flash translation layer (FTL) firmware and TrustZone, to achieve high deniability with low overhead. Specifically, this project develops a PDE system with the capabilities to: 1) defend against snapshot attacks using raw flash memory on mobile devices; 2) eliminate side-channel attacks that compromise deniability; 3) scale to deployment on mainstream mobile devices; and 4) efficiently provide usable functions such as fast mode switching. The project also develops novel teaching material on PDE and cybersecurity for K-12 students and for the Regional Cybersecurity Education Collaboration (RCEC), a new educational partnership on cybersecurity in Michigan.

Publications related to this research:

[DSN ’18] Bing Chang, Fengwei Zhang, Bo Chen, Yingjiu Li, Wen Tao Zhu, Yangguang Tian, Zhan Wang, and Albert Ching. MobiCeal: Towards Secure and Practical Plausibly Deniable Encryption on Mobile Devices. The 48th IEEE/IFIP International Conference on Dependable Systems and Networks (DSN ’18), June 2018 (Acceptance rate: 28%).

[Cybersecurity ’18] Qionglu Zhang, Shijie Jia, Bing Chang, and Bo Chen. Ensuring Data Confidentiality via Plausibly Deniable Encryption and Secure Deletion – A Survey. Cybersecurity (2018) 1: 1.

[ComSec ’18] Bing Chang, Yao Cheng, Bo Chen, Fengwei Zhang, Wen Tao Zhu, Yingjiu Li, and Zhan Wang. User-Friendly Deniable Storage for Mobile Devices. Elsevier Computers & Security, vol. 72, pp. 163-174, January 2018.

[CCS ’17] Shijie Jia, Luning Xia, Bo Chen, and Peng Liu. DEFTL: Implementing Plausibly Deniable Encryption in Flash Translation Layer. 2017 ACM Conference on Computer and Communications Security (CCS ’17), Dallas, Texas, USA, Oct 30 – Nov 3, 2017 (Acceptance rate: 18%).

[ACSAC ’15] Bing Chang, Zhan Wang, Bo Chen, and Fengwei Zhang. MobiPluto: File System Friendly Deniable Storage for Mobile Devices. 2015 Annual Computer Security Applications Conference (ACSAC ’15), Los Angeles, California, USA, December 2015 (Acceptance rate: 24.4%).

[ISC ’14] Xingjie Yu, Bo Chen, Zhan Wang, Bing Chang, Wen Tao Zhu, and Jiwu Jing. MobiHydra: Pragmatic and Multi-Level Plausibly Deniable Encryption Storage for Mobile Devices. The 17th Information Security Conference (ISC ’14), Hong Kong, China, Oct. 2014.

Link to more information about this project: https://snp.cs.mtu.edu/research/index.html#pde

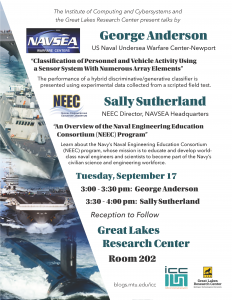

George Anderson and Sally Sutherland of the