Shane Mueller (CLS/ICC-HCC) will be presenting at the National Academy of Sciences/Air Force Research Laboratory (AFRL) Human-AI Teaming Through Warfighter-Centered Designs Workshop this afternoon (July 29) at 2:30 p.m.

Mueller’s presentation is titled “Human-centered approaches for explainable AI.”

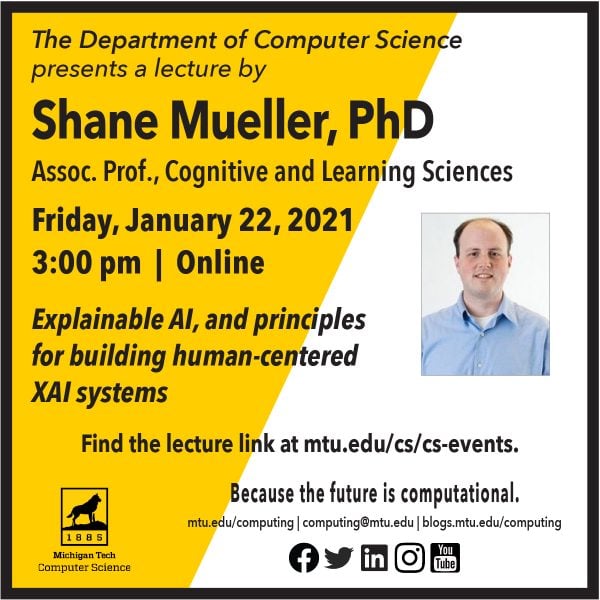

The Department of Computer Science will present a lecture by Dr. Shane Mueller on Friday, January 22, 2021, at 3:00 p.m.

Mueller is an associate professor in the Applied Cognitive Science and Human Factors program of the Cognitive and Learning Sciences department. His lecture is titled “Explainable AI, and principles for building human-centered XAI systems.”

Mueller’s research focuses on human memory and the representational, perceptual, strategic, and decisional factors that support it. He employs applied and basic research methodologies, typically with a goal of implementing formal quantitative mathematical or computational models of cognition and behavior.

He is also the primary developer of the Psychology Experiment Building Language (PEBL), a software platform for creating psychology experiments.

Mueller has undergraduate degrees in mathematics and psychology from Drew University, and a Ph.D. in cognitive psychology from the University of Michigan. He was a senior scientist at Klein Associates Division of Applied Research Associates from 2006 to 2011. His research has been supported by NIH, DARPA, IARPA, the Air Force Research Laboratory, the Army Research Institute, the Defense Threat Reduction Agency, and others.

Lecture Title:

Explainable AI, and principles for building human-centered XAI systems

Lecture Abstract

In recent years, Explainable Artificial Intelligence (XAI) has re-emerged in response to the development of modern AI and ML systems. These systems are complex and sometimes biased, but they nevertheless make decisions that impact our lives. XAI systems are frequently algorithm-focused, starting and ending with an algorithm that implements a basic untested idea about explainability. These systems are often not tested to determine whether the algorithm helps users accomplish any goals, and so their explainability remains unproven. I will discuss some recent advances and approaches to developing XAI, and describe how many of these systems are likely to incorporate many of the lessons from past successes and failures to build explainable systems. I will then review some of the basic concepts that have been used for user-centered XAI systems over the past 40 years of research. Based on this, I will describe a set of empirically grounded, human user-centered design principles that may guide developers to create successful explainable systems.

Shane Mueller (CLS/HCC) was awarded DARPA’s Explainable AI (XAI) Grant to develop naturalistic theories of explanation with AI systems and a computational cognitive model of explanatory reasoning. This is a four-year grant in the amount of $808,450.

The ICC Annual Retreat was held on April 21. Co-Director Dan Fuhrmann presented ICC Achievement Awards to two researchers for their outstanding research and contributions to the ICC in 2017. Zhuo Feng from the Center for Scalable Architectures and Systems (SAS) and Shane Mueller from the Center for Human-Centered Computing (HCC) were this year’s recipients.

Shane Mueller is an Associate Professor in the Department of Cognitive and Learning Sciences with expertise in cognitive and computational modeling. He was recently awarded DARPA’s Explainable AI (XAI) Grant to develop naturalistic theories of explanation with AI systems and a computational cognitive model of explanatory reasoning. In addition to this effort, he has served as Co-PI on several proposals in collaboration with other HCC members from the KIP, CS, and Math departments. He has consistently published his work in top journals and conferences, such as those of the IEEE and cognitive modeling communities, and has organized several conferences. Another significant achievement is his development of PEBL: The Free Psychology Experiment Building Language for HCI and psychology researchers, which is widely used across the world.

Zhuo Feng is an Associate Professor in the Department of Electrical and Computer Engineering. He has received funding as the sole PI on three National Science Foundation (NSF) grants since 2014, totaling $1.1 million. He received a Faculty Early Career Development (CAREER) Award from NSF in 2014, a Best Paper Award from the ACM/IEEE Design Automation Conference (DAC) in 2013, and two Best Paper Award nominations from the IEEE/ACM International Conference on Computer-Aided Design (ICCAD) in 2006 and 2008. His publications include 16 journal papers (14 in IEEE/ACM Transactions) and 34 ACM/IEEE conference papers.